Natural Language Processing

Preface

Reference Book

Aston Zhang, Zachary C. Lipton, Mu Li, Alexander J. Smola. Dive into Deep Learning. 2020.

Introduction - Dive-into-DL-PyTorch (tangshusen.me)

Natural Language Processing

source code: NJU-ymhui/DeepLearning: Deep Learning with pytorch (github.com)

use git to clone: https://github.com/NJU-ymhui/DeepLearning.git

/NLP

word2vec_dataset.py word2vec_pretraining.py fasttext.py similarity_compare.py BERT.py BERT_pretraining_dataset.py

Word Embedding

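In natural language systems, the word is the basic unit of meaning. This section introduces a new concept, the word vector: a vector used to represent the meaning of a word. Mapping each word to its corresponding word vector is the technique known as word embedding.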

The following subsections introduce several techniques for mapping words to vectors.

Why Not Use One-Hot Vectors

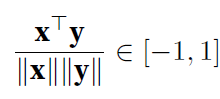

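When we worked on machine translation earlier, we used one-hot vectors, but in hindsight they are not a good choice. A key reason is that one-hot vectors cannot express the similarity between different words. Take the commonly used cosine similarity: for vectors $\mathbf{x}, \mathbf{y} \in \mathbb{R}^d$, it measures their similarity by the cosine of the angle between them,

$$\frac{\mathbf{x}^\top \mathbf{y}}{\|\mathbf{x}\| \|\mathbf{y}\|} \in [-1, 1].$$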

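However, by the definition of one-hot vectors, the dot product of the one-hot vectors of any two different words is 0, so their cosine similarity is also 0. In other words, one-hot vectors cannot encode the similarity between words.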
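A quick numerical check of this claim: the sketch below builds one-hot vectors for a hypothetical four-word vocabulary and computes their cosine similarity in plain Python. The helper names `one_hot` and `cosine` are illustrative, not taken from the original code.

```python
import math


def cosine(x, y):
    """Cosine similarity x.y / (||x|| * ||y||)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)


def one_hot(index, size):
    """A vector of length `size` with 1.0 at `index` and 0.0 elsewhere."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


# Hypothetical toy vocabulary: related words ("cat"/"dog") get no similarity credit
vocab = ["cat", "dog", "car", "truck"]
vectors = {w: one_hot(i, len(vocab)) for i, w in enumerate(vocab)}

print(cosine(vectors["cat"], vectors["dog"]))  # 0.0
print(cosine(vectors["car"], vectors["truck"]))  # 0.0
print(cosine(vectors["cat"], vectors["cat"]))  # 1.0
```

Every pair of distinct words scores exactly 0, whether or not the words are related. This is why dense, learned word vectors are needed.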

Self-Supervised word2vec

- The skip-gram model

- The continuous bag of words (CBOW) model

The Skip-Gram Model

For details, see 15.1. Skip-Gram — Dive into Deep Learning 1.0.3 documentation (d2l.ai).
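In the skip-gram model, following the d2l chapter, every word has a center-word vector $\mathbf{v}$ and a context-word vector $\mathbf{u}$. The probability of generating context word $w_o$ from center word $w_c$ is a softmax over the vocabulary: $P(w_o \mid w_c) = \frac{\exp(\mathbf{u}_o^\top \mathbf{v}_c)}{\sum_{i \in \mathcal{V}} \exp(\mathbf{u}_i^\top \mathbf{v}_c)}$. The plain-Python sketch below uses made-up 2-dimensional vectors for a hypothetical three-word vocabulary.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def skip_gram_prob(context, center, v, u):
    """P(context | center) = exp(u_o . v_c) / sum_i exp(u_i . v_c)."""
    v_c = v[center]
    scores = {w: dot(u_w, v_c) for w, u_w in u.items()}
    denom = sum(math.exp(s) for s in scores.values())
    return math.exp(scores[context]) / denom


# Hypothetical embeddings: v = center-word vectors, u = context-word vectors
v = {"the": [0.1, 0.2], "man": [0.5, -0.3], "loves": [0.4, 0.4]}
u = {"the": [0.3, 0.1], "man": [-0.2, 0.6], "loves": [0.7, 0.0]}

probs = {w: skip_gram_prob(w, "loves", v, u) for w in u}
print(probs)
print(sum(probs.values()))  # 1.0 up to floating-point rounding
```

The denominator sums over every word in the vocabulary. For a real vocabulary this is expensive, which is what motivates the approximate training methods below.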

Continuous Bag of Words (CBOW)

For details, see 15.1. CBOW — Dive into Deep Learning 1.0.3 documentation (d2l.ai).
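CBOW reverses the direction: it predicts the center word from the average of its context-word vectors. In d2l's notation for CBOW, $\mathbf{v}$ denotes context-word vectors and $\mathbf{u}$ center-word vectors, and $P(w_c \mid \mathcal{W}_o) = \frac{\exp(\mathbf{u}_c^\top \bar{\mathbf{v}}_o)}{\sum_{i \in \mathcal{V}} \exp(\mathbf{u}_i^\top \bar{\mathbf{v}}_o)}$, where $\bar{\mathbf{v}}_o$ is the mean of the context vectors. The plain-Python sketch below uses hypothetical vectors.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cbow_prob(center, contexts, v, u):
    """P(center | contexts): softmax of u_i . v_bar, where v_bar = mean of context vectors."""
    dim = len(v[contexts[0]])
    v_bar = [sum(v[w][k] for w in contexts) / len(contexts) for k in range(dim)]
    scores = {w: dot(u_w, v_bar) for w, u_w in u.items()}
    denom = sum(math.exp(s) for s in scores.values())
    return math.exp(scores[center]) / denom


# Hypothetical embeddings with CBOW roles: v = context-word vectors, u = center-word vectors
v = {"the": [0.1, 0.2], "man": [0.5, -0.3], "loves": [0.4, 0.4]}
u = {"the": [0.3, 0.1], "man": [-0.2, 0.6], "loves": [0.7, 0.0]}

contexts = ["the", "man"]
probs = {w: cbow_prob(w, contexts, v, u) for w in u}
print(probs)
```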

Approximate Training

Negative Sampling

For details, see 15.2. Negative-Sampling — Dive into Deep Learning 1.0.3 documentation (d2l.ai).

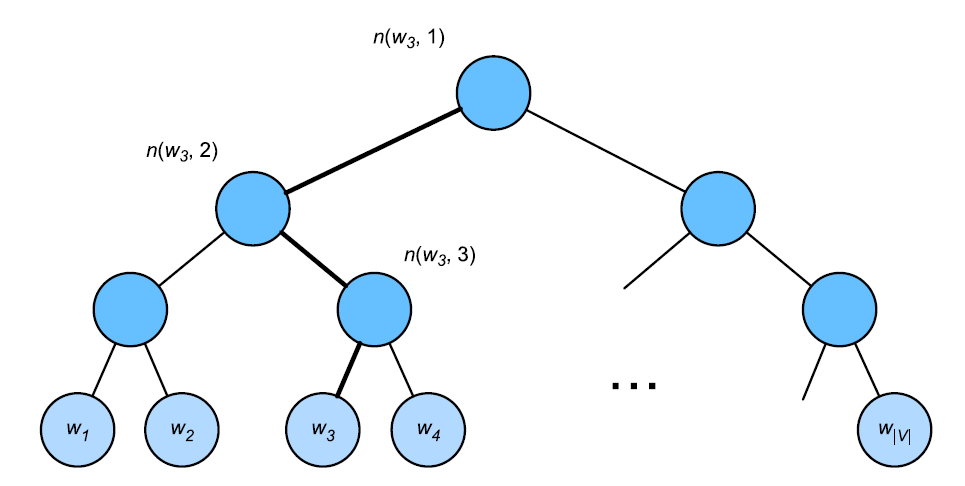
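Negative sampling replaces the full softmax with $K$ noise words per (center, context) pair. As in d2l, noise words are drawn with probability proportional to word frequency raised to the power 0.75. The loss for one pair is $-\log \sigma(\mathbf{u}_o^\top \mathbf{v}_c) - \sum_{k=1}^{K} \log \sigma(-\mathbf{u}_{h_k}^\top \mathbf{v}_c)$. The plain-Python sketch below uses hypothetical counts and vectors, and its function names are illustrative.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sample_noise(counts, k, exclude, rng, power=0.75):
    """Draw k noise words with P(w) proportional to count(w)**power, skipping `exclude`.

    Assumes at least one word other than `exclude` has a nonzero count.
    """
    words = list(counts)
    weights = [counts[w] ** power for w in words]
    noise = []
    while len(noise) < k:
        cand = rng.choices(words, weights=weights)[0]
        if cand != exclude:
            noise.append(cand)
    return noise


def neg_sampling_loss(center, context, noise, v, u):
    """-log sigma(u_o . v_c) - sum_k log sigma(-u_k . v_c)."""
    v_c = v[center]
    loss = -math.log(sigmoid(dot(u[context], v_c)))
    for w in noise:
        loss -= math.log(sigmoid(-dot(u[w], v_c)))
    return loss


# Hypothetical word counts and 2-d vectors
counts = {"the": 50, "man": 5, "loves": 3, "his": 8, "son": 2}
v = {w: [0.1 * i, -0.05 * i] for i, w in enumerate(counts, start=1)}
u = {w: [0.05 * i, 0.1] for i, w in enumerate(counts, start=1)}

rng = random.Random(0)
noise = sample_noise(counts, k=5, exclude="man", rng=rng)
print(noise)
print(neg_sampling_loss("loves", "man", noise, v, u))
```

Each training step now touches only $K + 1$ context vectors instead of the whole vocabulary.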

Hierarchical Softmax

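Hierarchical softmax is another approximate training method. It uses a binary tree in which each leaf node represents a word in the vocabulary V. A word's probability is computed only from the nodes on the path from the root to its leaf (about log2|V| of them) rather than from the whole vocabulary.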

For the underlying principle, see 15.2. Softmax — Dive into Deep Learning 1.0.3 documentation (d2l.ai).
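In hierarchical softmax, a word's probability is the product of binary decisions along its root-to-leaf path. At each internal node $n$ with vector $\mathbf{u}_n$, going left has probability $\sigma(\mathbf{u}_n^\top \mathbf{v}_c)$ and going right has probability $\sigma(-\mathbf{u}_n^\top \mathbf{v}_c)$. Since $\sigma(x) + \sigma(-x) = 1$, the leaf probabilities automatically sum to 1. The sketch below hard-codes a hypothetical tree with four leaves; the tree, node names, and vectors are all made up for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Hypothetical tree: root n0 with children n1 (leaves a, b) and n2 (leaves c, d).
# Each path lists (internal node, goes_left) pairs from the root down to the leaf.
paths = {
    "a": [("n0", True), ("n1", True)],
    "b": [("n0", True), ("n1", False)],
    "c": [("n0", False), ("n2", True)],
    "d": [("n0", False), ("n2", False)],
}


def hs_prob(word, v_c, node_vecs):
    """P(word | w_c) = product over the path of sigma(+/- u_n . v_c); + for left, - for right."""
    p = 1.0
    for node, goes_left in paths[word]:
        sign = 1.0 if goes_left else -1.0
        p *= sigmoid(sign * dot(node_vecs[node], v_c))
    return p


node_vecs = {"n0": [0.2, -0.1], "n1": [0.5, 0.3], "n2": [-0.4, 0.8]}  # u_n per internal node
v_c = [1.0, 0.5]  # hypothetical center-word vector
probs = {w: hs_prob(w, v_c, node_vecs) for w in paths}
print(probs)
print(sum(probs.values()))  # 1.0 up to rounding, because sigma(x) + sigma(-x) = 1
```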

The Dataset for Pretraining Word Embeddings

Let's go straight to the code.

code

```python
import os
```

output

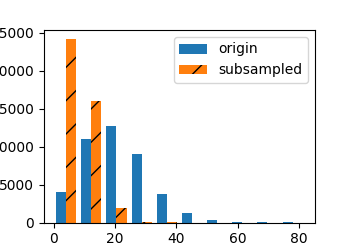

```
length of sentence: 42069
"the" count before subsample: 50770
```

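Two steps of this dataset pipeline can be sketched in plain Python. The first is subsampling: as described in d2l, each occurrence of word $w_i$ is discarded with probability $\max(1 - \sqrt{t / f(w_i)}, 0)$, where $f(w_i)$ is the word's relative frequency and $t = 10^{-4}$. Very frequent words such as "the" are therefore heavily thinned out. The second step extracts center/context pairs using a window size drawn uniformly from 1 to `max_window_size`. The function bodies and the toy corpus below are an illustrative sketch, not the repository's code.

```python
import math
import random
from collections import Counter


def subsample(sentences, t=1e-4, seed=0):
    """Keep each token w with probability sqrt(t / f(w)), i.e. discard it with
    probability max(1 - sqrt(t / f(w)), 0); f(w) = count(w) / total tokens."""
    rng = random.Random(seed)
    counter = Counter(tok for line in sentences for tok in line)
    num_tokens = sum(counter.values())

    def keep(token):
        return rng.random() < math.sqrt(t / (counter[token] / num_tokens))

    return [[tok for tok in line if keep(tok)] for line in sentences]


def get_centers_and_contexts(corpus, max_window_size, seed=0):
    """Return all center words and, for each, the words within a random window."""
    rng = random.Random(seed)
    centers, contexts = [], []
    for line in corpus:
        if len(line) < 2:  # a center word needs at least one context word
            continue
        centers += line
        for i in range(len(line)):
            window_size = rng.randint(1, max_window_size)
            lo, hi = max(0, i - window_size), min(len(line), i + 1 + window_size)
            contexts.append([line[j] for j in range(lo, hi) if j != i])
    return centers, contexts


# Hypothetical toy corpus in which "the" dominates
toy = [["the"] * 50 + ["cat", "sat"] for _ in range(20)]
before = sum(line.count("the") for line in toy)
after = sum(line.count("the") for line in subsample(toy))
print(before, after)

centers, contexts = get_centers_and_contexts([list(range(7)), list(range(7, 10))], 2)
for c, ctx in zip(centers, contexts):
    print("center", c, "has contexts", ctx)
```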
Pretraining word2vec

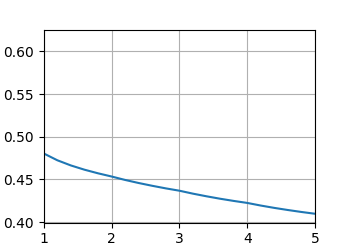

Next, we implement the skip-gram model and pretrain word2vec on the PTB dataset using negative sampling.

code

```python
import math
```

output

```
Parameter embedding_weight: torch.Size([20, 4]), dtype = torch.float32
cosine sim = 0.722: microprocessor
```
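When skip-gram is trained with negative sampling, each center word is scored against a padded list of context and noise words through dot products. The loss is a sigmoid binary cross-entropy that uses a mask to ignore the padding. The d2l chapter implements this with embedding layers, `torch.bmm`, and `binary_cross_entropy_with_logits` with a weight mask. The framework-free sketch below reproduces only the per-example arithmetic; its helper names and numbers are illustrative assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def skip_gram_scores(center_vec, candidate_vecs):
    """One logit per candidate: the dot product u_j . v_c."""
    return [dot(center_vec, c) for c in candidate_vecs]


def masked_bce(logits, labels, mask):
    """Sigmoid binary cross-entropy averaged over positions where mask == 1."""
    total = 0.0
    for z, y, m in zip(logits, labels, mask):
        if m:
            p = sigmoid(z)
            total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / sum(mask)


# Hypothetical example: 2 context words, 2 noise words, 1 padding slot
center_vec = [0.5, -0.2]
candidates = [[0.4, 0.1], [0.3, -0.3], [-0.6, 0.2], [0.1, 0.9], [0.0, 0.0]]
labels = [1, 1, 0, 0, 0]  # 1 = real context word, 0 = noise word or padding
mask = [1, 1, 1, 1, 0]  # 0 = padding, ignored by the loss

logits = skip_gram_scores(center_vec, candidates)
print(masked_bce(logits, labels, mask))
```

Because padded positions are skipped, sequences with different numbers of context words can share one batch without distorting the loss.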

Word Embedding with Global Vectors (GloVe)

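Word co-occurrences within a context window can carry rich semantic information. For example, "solid" is more likely to co-occur with "ice" than with "water", whereas "steam" is more likely to co-occur with "water". Moreover, global corpus statistics of such co-occurrences can be precomputed, which can make training more efficient. To exploit the statistical information of the entire corpus for word embedding, GloVe is trained on these global corpus statistics.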

For the model, see 15.5. GloVe Model— Dive into Deep Learning 1.0.3 documentation (d2l.ai).
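The GloVe objective in the linked chapter is a weighted least-squares loss over nonzero co-occurrence counts $x_{ij}$: $\sum_{i,j} h(x_{ij}) (\mathbf{u}_j^\top \mathbf{v}_i + b_i + c_j - \log x_{ij})^2$. The weight function is $h(x) = (x/c)^\alpha$ for $x < c$ and 1 otherwise, and the chapter gives $c = 100$ and $\alpha = 0.75$ as example values. The plain-Python sketch below uses hypothetical counts and zero-initialized parameters.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def glove_weight(x, c=100.0, alpha=0.75):
    """h(x) = (x / c)**alpha if x < c else 1; down-weights rare pairs, caps frequent ones."""
    return (x / c) ** alpha if x < c else 1.0


def glove_loss(cooc, v, u, b, c_bias):
    """Sum over x_ij > 0 of h(x_ij) * (u_j . v_i + b_i + c_j - log x_ij)**2."""
    loss = 0.0
    for (i, j), x in cooc.items():
        if x <= 0:
            continue  # log(0) is undefined, so zero counts are skipped
        err = dot(u[j], v[i]) + b[i] + c_bias[j] - math.log(x)
        loss += glove_weight(x) * err ** 2
    return loss


# Hypothetical co-occurrence counts and zero-initialized parameters
cooc = {("ice", "solid"): 10.0, ("steam", "water"): 150.0, ("ice", "steam"): 0.0}
words = ["ice", "solid", "steam", "water"]
v = {w: [0.0, 0.0] for w in words}  # center-word vectors
u = {w: [0.0, 0.0] for w in words}  # context-word vectors
b = {w: 0.0 for w in words}  # center-word biases
c_bias = {w: 0.0 for w in words}  # context-word biases
print(glove_loss(cooc, v, u, b, c_bias))
```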

Subword Embedding

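Some words can be viewed as variants of other words, such as "dog" and "dogs", or "help", "helps", and "helping"; likewise, the relationship between "boy" and "boyfriend" is the same as that between "girl" and "girlfriend". Such latent connections among words sometimes convey quite useful information and provide key context for predictive analysis. Unfortunately, neither word2vec nor GloVe takes the internal structure of words into account.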

The fastText Model

For the underlying principle, see 15.6. fastText — Dive into Deep Learning 1.0.3 documentation (d2l.ai).
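In fastText, a word is wrapped in the boundary symbols "<" and ">" and represented by its character n-grams plus the whole wrapped word itself. Its vector is the sum of its subword vectors. For "where" with n = 3, the subwords are "<wh", "whe", "her", "ere", "re>", and "<where>". In the plain-Python sketch below, the subword vectors are hypothetical and unknown n-grams are simply skipped. The real fastText library instead hashes n-grams into a fixed number of buckets.

```python
def fasttext_subwords(word, min_n=3, max_n=6):
    """All n-grams (min_n <= n <= max_n) of '<word>', plus '<word>' itself."""
    token = f"<{word}>"
    grams = set()
    for n in range(min_n, max_n + 1):
        for i in range(len(token) - n + 1):
            grams.add(token[i:i + n])
    grams.add(token)  # the special subword for the whole word
    return grams


def word_vector(word, subword_vecs, dim):
    """v_w = sum of the vectors of w's subwords (unknown subwords are skipped here)."""
    vec = [0.0] * dim
    for g in fasttext_subwords(word):
        for k, x in enumerate(subword_vecs.get(g, [0.0] * dim)):
            vec[k] += x
    return vec


print(sorted(fasttext_subwords("where", 3, 3)))

# Hypothetical subword vectors: "dog" and "dogs" share the subwords "<do" and "dog"
subword_vecs = {"<do": [1.0, 0.0], "dog": [0.0, 1.0], "ogs": [0.5, 0.5]}
print(word_vector("dog", subword_vecs, 2))
print(word_vector("dogs", subword_vecs, 2))
```

Because "dog" and "dogs" share subwords, their vectors share components even if "dogs" is rare in the corpus.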

Byte Pair Encoding

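In fastText, all extracted subwords must be of specified lengths, such as 3 to 6, so the vocabulary size cannot be predefined. To allow variable-length subwords in a fixed-size vocabulary, we can apply a compression algorithm called byte pair encoding (BPE) to extract subwords.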

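Byte pair encoding performs a statistical analysis of the training dataset to discover common symbols within words, such as consecutive characters of arbitrary length. Starting from symbols of length 1, byte pair encoding iteratively merges the most frequent pair of consecutive symbols to produce new, longer symbols.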

code

```python
import collections
```

output

```
token_freq:
```
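The merge loop described above can be sketched as follows. The toy word frequencies are hypothetical and follow the style of the d2l example, with "_" marking the end of a word. Unlike a plain string-replace implementation, `merge_symbols` here walks over the symbol list, so a pair is merged only at real symbol boundaries. Treat this as an illustrative sketch, not the repository's code.

```python
import collections


def get_max_freq_pair(token_freqs):
    """Count adjacent symbol pairs (weighted by word frequency); return the most frequent."""
    pairs = collections.defaultdict(int)
    for token, freq in token_freqs.items():
        symbols = token.split()
        for i in range(len(symbols) - 1):
            pairs[symbols[i], symbols[i + 1]] += freq
    return max(pairs, key=pairs.get)


def merge_symbols(pair, token_freqs, symbols):
    """Merge every occurrence of `pair` into one new symbol and add it to `symbols`."""
    merged = "".join(pair)
    symbols.append(merged)
    new_token_freqs = {}
    for token, freq in token_freqs.items():
        parts, out, i = token.split(), [], 0
        while i < len(parts):
            if i + 1 < len(parts) and (parts[i], parts[i + 1]) == pair:
                out.append(merged)
                i += 2
            else:
                out.append(parts[i])
                i += 1
        new_token_freqs[" ".join(out)] = freq
    return new_token_freqs


raw_token_freqs = {"fast_": 4, "faster_": 3, "tall_": 5, "taller_": 4}
token_freqs = {" ".join(tok): f for tok, f in raw_token_freqs.items()}  # start from characters
symbols = list("abcdefghijklmnopqrstuvwxyz") + ["_", "[UNK]"]

for step in range(10):
    pair = get_max_freq_pair(token_freqs)
    token_freqs = merge_symbols(pair, token_freqs, symbols)
    print(f"merge #{step + 1}: {pair}")
print(list(token_freqs))  # ['fast_', 'fast er_', 'tall_', 'tall er_']
```

After 10 merges the learned symbols include "tall", "fast", and "er_". These symbols can then be used to segment words that did not appear in the training data.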

Word Similarity and Analogy

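Word vectors pretrained on a large corpus can be applied to downstream natural language processing tasks. Below we demonstrate the semantics of such pretrained word vectors by applying them to word similarity and analogy tasks.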

code

```python
import os
```

output

```
size of vocab:
```
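Both tasks reduce to cosine-similarity search over the embedding table. Word similarity returns the nearest neighbors of a word's vector. The analogy a : b :: c : d is answered by the word nearest to vec(b) - vec(a) + vec(c). The plain-Python sketch below uses hypothetical 3-d embeddings; real pretrained vectors such as GloVe have 50 or more dimensions. Unlike some implementations, it excludes the query words from the candidates.

```python
import math


def cosine(x, y):
    dot = sum(a * b for a, b in zip(x, y))
    return dot / (math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y)))


def knn(query_vec, embeddings, k, exclude=()):
    """The k words whose vectors have the highest cosine similarity to query_vec."""
    scored = [(cosine(query_vec, vec), w) for w, vec in embeddings.items() if w not in exclude]
    scored.sort(reverse=True)
    return [(w, round(s, 3)) for s, w in scored[:k]]


def get_similar_tokens(word, embeddings, k):
    return knn(embeddings[word], embeddings, k, exclude={word})


def get_analogy(a, b, c, embeddings):
    """Answer a : b :: c : ? with the word nearest to vec(b) - vec(a) + vec(c)."""
    target = [vb - va + vc for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])]
    return knn(target, embeddings, 1, exclude={a, b, c})[0][0]


# Hypothetical embeddings, hand-built so that the man->woman offset equals king->queen
embeddings = {
    "man": [1.0, 0.0, 0.2],
    "woman": [1.0, 1.0, 0.2],
    "king": [1.0, 0.0, 1.0],
    "queen": [1.0, 1.0, 1.0],
    "apple": [-1.0, 0.2, 0.0],
}
print(get_similar_tokens("king", embeddings, 2))
print(get_analogy("man", "woman", "king", embeddings))  # queen
```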

Bidirectional Encoder Representations from Transformers (BERT)

So far, all the word embedding models discussed above are context-independent. We now introduce context-sensitive models.

Context-Independent vs. Context-Sensitive Models

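Consider the word embedding models used earlier, word2vec and GloVe. Both assign the same pretrained vector to the same word regardless of its context (if any). Formally, the context-independent representation of any token x is a function f(x) that takes only x as its input. Given the abundant polysemy and complex semantics of natural language, context-independent representations have obvious limitations, because the same word can express very different meanings in different contexts.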

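This motivated context-sensitive models, in which a word's representation depends on its context: the context-sensitive representation of token x is a function f(x, c(x)) of both x and its context c(x).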

Task-Specific vs. Task-Agnostic Models

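Existing NLP solutions each rely on a task-specific architecture, yet designing a specific architecture for every task is difficult. The GPT model offers a general, task-agnostic model for context-sensitive representations. Built on the Transformer decoder, GPT pretrains a language model that represents text sequences. When GPT is applied to a downstream task, the output of the language model is fed into an additional linear output layer that predicts the task's label.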

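However, because language models are autoregressive, GPT only looks forward (from left to right). Consider the contexts "i went to the bank to deposit cash" (a financial institution) and "i went to the bank to sit down" (a riverbank). Here "bank" is sensitive only to its left context, so GPT returns the same representation for "bank" in both sentences, even though the word has different meanings.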
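The left-to-right restriction comes from the causal mask in GPT's Transformer decoder: position i may only attend to positions j <= i. The plain-Python sketch below applies such a mask to a hypothetical matrix of attention scores. The row for "bank" only ever sees "i went to the bank", so whatever follows ("deposit cash" or "sit down") cannot affect its representation.

```python
import math


def causal_attention_weights(scores):
    """Softmax each row of an n x n score matrix under a causal mask.

    Row i may attend only to positions j <= i; future positions get weight 0.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        visible = scores[i][: i + 1]  # positions 0..i
        m = max(visible)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in visible]
        z = sum(exps)
        weights.append([e / z for e in exps] + [0.0] * (n - i - 1))
    return weights


tokens = ["i", "went", "to", "the", "bank", "to", "deposit", "cash"]
scores = [[0.0] * len(tokens) for _ in tokens]  # uniform scores, for illustration only
w = causal_attention_weights(scores)
print(tokens[4], [round(x, 2) for x in w[4]])  # "bank" attends only to the first 5 tokens
```

BERT drops this mask and uses a Transformer encoder, so every position can attend to both its left and its right context.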

BERT

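BERT combines the best of both worlds. It is context-sensitive, encoding context bidirectionally, and task-agnostic, requiring only minimal architecture changes for a wide range of downstream tasks.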

code

```python
import torch
```

output

```
shape of encoded_X:
```
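BERT's input packs one or two text sequences into a single sequence. A "<cls>" token goes at the start and a "<sep>" token after each sequence, and segment IDs 0 and 1 distinguish the two parts. The input representation is the sum of token, segment, and positional embeddings, all of which BERT learns. The sketch below mirrors that logic in plain Python. The special-token spellings follow d2l's convention, and the embedding tables are random placeholders rather than trained parameters.

```python
import random


def get_tokens_and_segments(tokens_a, tokens_b=None):
    """<cls> A <sep> [B <sep>], with segment id 0 for the A part and 1 for the B part."""
    tokens = ["<cls>"] + tokens_a + ["<sep>"]
    segments = [0] * (len(tokens_a) + 2)
    if tokens_b is not None:
        tokens += tokens_b + ["<sep>"]
        segments += [1] * (len(tokens_b) + 1)
    return tokens, segments


def bert_input_embeddings(tokens, segments, dim=4, seed=0):
    """Token embedding + segment embedding + positional embedding, per position.

    The tables are random placeholders; in BERT all three are learned.
    """
    rng = random.Random(seed)

    def rand_vec():
        return [rng.gauss(0.0, 1.0) for _ in range(dim)]

    token_table = {t: rand_vec() for t in sorted(set(tokens))}
    segment_table = [rand_vec(), rand_vec()]
    position_table = [rand_vec() for _ in range(len(tokens))]
    return [
        [a + b + c for a, b, c in zip(token_table[t], segment_table[s], position_table[p])]
        for p, (t, s) in enumerate(zip(tokens, segments))
    ]


tokens, segments = get_tokens_and_segments(["this", "movie", "is", "great"], ["i", "like", "it"])
print(tokens)
print(segments)
X = bert_input_embeddings(tokens, segments)
print(len(X), len(X[0]))  # 10 4
```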

The Dataset for Pretraining BERT

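To pretrain the BERT model, we need to generate a dataset in a suitable format for the two pretraining tasks: masked language modeling and next sentence prediction. Empirically, pretraining BERT on a customized dataset can be more effective. For ease of demonstration, we use the smaller WikiText-2 corpus.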

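Compared with the PTB dataset, WikiText-2

- retains the original punctuation, which makes it suitable for next sentence prediction;

- retains the original case and numbers;

- is more than twice as large.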

code

```python
import os
```

output

TBD
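Generating examples for the two pretraining tasks can be sketched as follows. For masked language modeling, the BERT recipe selects 15% of the non-special tokens as prediction targets. Each selected token is replaced by "<mask>" 80% of the time, by a random word 10% of the time, and left unchanged 10% of the time. For next sentence prediction, the true next sentence is kept half of the time; otherwise a random sentence from the corpus is substituted. The plain-Python functions and toy data below are a hypothetical sketch of that procedure, not the repository's implementation.

```python
import random


def replace_mlm_tokens(tokens, vocab_words, rng, mask_ratio=0.15):
    """Return (mlm_input, positions, labels) for masked language modeling."""
    candidates = [i for i, t in enumerate(tokens) if t not in ("<cls>", "<sep>")]
    rng.shuffle(candidates)
    num_preds = max(1, round(len(tokens) * mask_ratio))
    mlm_input = list(tokens)
    for i in candidates[:num_preds]:
        r = rng.random()
        if r < 0.8:
            mlm_input[i] = "<mask>"  # 80%: mask the token
        elif r < 0.9:
            pass  # 10%: keep the original token
        else:
            mlm_input[i] = rng.choice(vocab_words)  # 10%: replace with a random word
    positions = sorted(candidates[:num_preds])
    labels = [tokens[i] for i in positions]  # the model must predict the originals
    return mlm_input, positions, labels


def get_next_sentence(sentence, next_sentence, paragraphs, rng):
    """Return (sentence, candidate, is_next) for next sentence prediction."""
    if rng.random() < 0.5:
        return sentence, next_sentence, True
    # Otherwise use a random sentence from a random paragraph as the negative example
    return sentence, rng.choice(rng.choice(paragraphs)), False


rng = random.Random(0)
words = "the cat sat on the mat while the dog slept near the warm red door".split()
tokens = ["<cls>"] + words[:9] + ["<sep>"] + words[9:] + ["<sep>"]
mlm_input, positions, labels = replace_mlm_tokens(tokens, sorted(set(words)), rng)
print(mlm_input)
print(positions, labels)

paragraphs = [[["the", "cat", "sat"], ["it", "purred"]], [["rain", "fell"], ["we", "stayed", "in"]]]
print(get_next_sentence(["the", "cat", "sat"], ["it", "purred"], paragraphs, rng))
```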

Pretraining BERT

TBD